H-GAN: the power of GANs in your Hands

Sergiu Oprea, Giorgos Karvounas, Pablo Martinez-Gonzalez, Nikolaos Kyriazis, Sergio Orts-Escolano, Iason Oikonomidis, Alberto Garcia-Garcia, Aggeliki Tsoli, Jose Garcia-Rodriguez, and Antonis Argyros

In 2021 International Joint Conference on Neural Networks (IJCNN)

2021

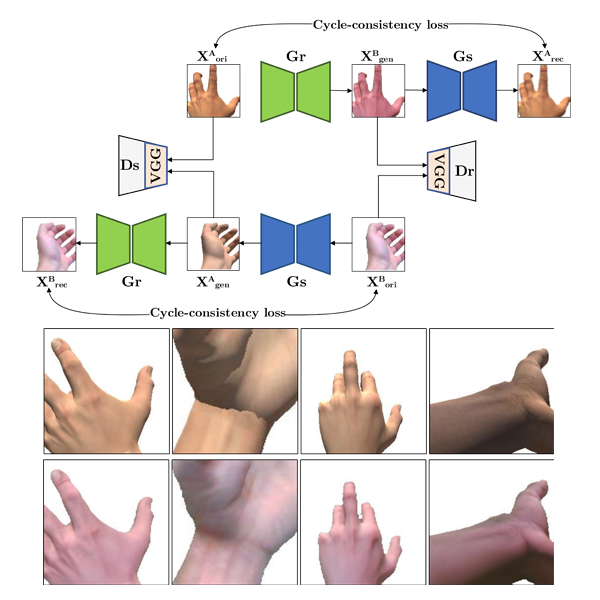

We present HandGAN (H-GAN), a cycle-consistent adversarial learning approach that implements multi-scale perceptual discriminators. It is designed to translate synthetic images of hands to the real domain. Synthetic hands provide complete ground-truth annotations, yet they are not representative of the target distribution of real-world data. We strive to provide the perfect blend of a realistic hand appearance with synthetic annotations. Relying on image-to-image translation, we improve the appearance of synthetic hands so that it approximates the statistical distribution underlying a collection of real images of hands. H-GAN tackles not only cross-domain tone mapping but also structural differences in localized areas, such as shading discontinuities. Results are evaluated both qualitatively and quantitatively, and improve on previous work. Furthermore, we successfully apply the generated images to the hand classification task.
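The cycle-consistency idea mentioned in the abstract can be illustrated with a minimal sketch. It is not the H-GAN implementation: the L1 distance, the flattened-list image representation, and the names `l1_distance`, `cycle_consistency_loss`, `g_syn2real`, and `g_real2syn` are all assumptions made for illustration, following the generic cycle-consistent formulation in which an image translated to the other domain and back should match the original. The adversarial terms and the multi-scale perceptual discriminators are not modeled here.

```python
from typing import Callable, List, Sequence

# Assumption: an image is a flattened list of pixel intensities.
# A real system would use tensors from a deep learning framework.
Image = List[float]
Generator = Callable[[Sequence[float]], Image]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    synthetic: Sequence[float],
    real: Sequence[float],
    g_syn2real: Generator,  # hypothetical synthetic -> real generator
    g_real2syn: Generator,  # hypothetical real -> synthetic generator
) -> float:
    """Sum of the forward and backward reconstruction errors."""
    # Forward cycle: synthetic -> real -> synthetic.
    forward = l1_distance(g_real2syn(g_syn2real(synthetic)), synthetic)
    # Backward cycle: real -> synthetic -> real.
    backward = l1_distance(g_syn2real(g_real2syn(real)), real)
    return forward + backward
```

When the two generators are exact inverses of each other, the loss is zero; any information lost in a round trip shows up as a positive penalty, which is what discourages the translation from altering the hand structure that the synthetic annotations describe.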
